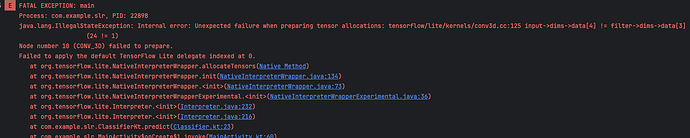

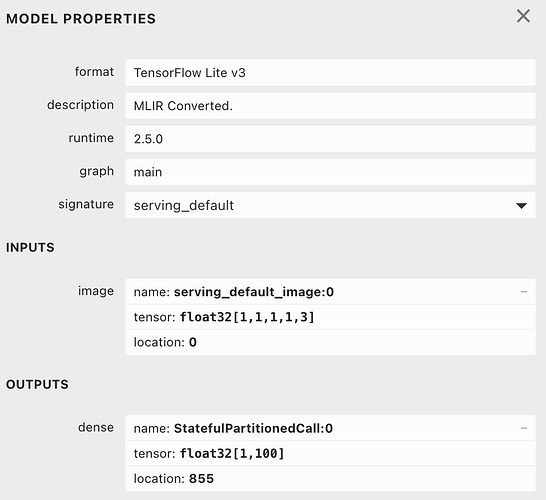

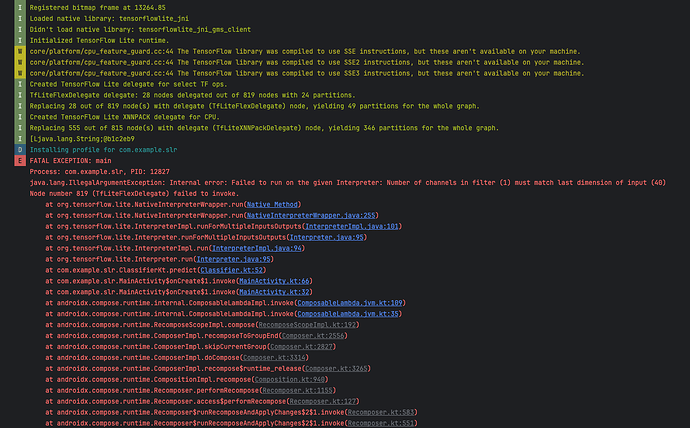

Hi:) I am trying to load a tflite model in my android app, but I get these two error messages:

This is how I converted the model:

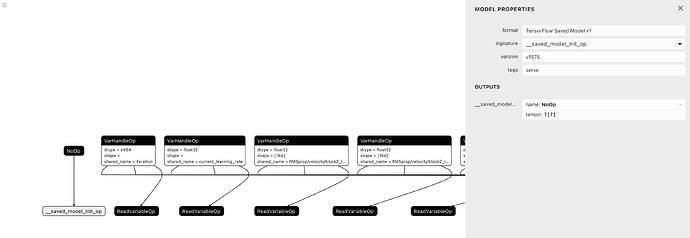

```python
import tensorflow as tf

tf_model = tf.saved_model.load('Models/MoViNet/models/movinet_freez10_3')

converter = tf.lite.TFLiteConverter.from_keras_model(tf_model)
converter.target_spec.supported_ops = [
    tf.lite.OpsSet.TFLITE_BUILTINS,
    tf.lite.OpsSet.SELECT_TF_OPS
]
tflite_model = converter.convert()

open('Models/MoViNet/lite/model.tflite', 'wb').write(tflite_model)
```
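`tf.saved_model.load` returns a restored trackable object rather than a `tf.keras.Model`, while `TFLiteConverter.from_keras_model` is documented for Keras models. A minimal sketch of the same conversion using `TFLiteConverter.from_saved_model`, which takes the SavedModel directory path directly, could look like this. The helper name `convert_saved_model_to_tflite` is hypothetical, and TensorFlow is imported inside the function only so the snippet can be loaded without TensorFlow installed.

```python
from pathlib import Path


def convert_saved_model_to_tflite(saved_model_dir: str, out_path: str) -> int:
    """Convert a SavedModel directory to a .tflite file (hypothetical helper).

    Returns the size of the written model in bytes.
    """
    # Imported lazily so this snippet can be imported without TensorFlow.
    import tensorflow as tf

    # from_saved_model reads the SavedModel directory itself, so there is
    # no need to call tf.saved_model.load first.
    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    # Keep TFLite builtins, and fall back to TensorFlow (Flex) kernels for
    # ops without a builtin; those need the select-tf-ops runtime on Android.
    converter.target_spec.supported_ops = [
        tf.lite.OpsSet.TFLITE_BUILTINS,
        tf.lite.OpsSet.SELECT_TF_OPS,
    ]
    tflite_model = converter.convert()

    out = Path(out_path)
    out.parent.mkdir(parents=True, exist_ok=True)
    out.write_bytes(tflite_model)  # opens, writes and closes the file
    return len(tflite_model)
```

Usage, with the paths from the question: `convert_saved_model_to_tflite('Models/MoViNet/models/movinet_freez10_3', 'Models/MoViNet/lite/model.tflite')`. Unlike the one-line `open(...).write(...)`, this closes the file explicitly.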

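This is how I try to load my model when it is located in the `assets` folder:

```kotlin
import android.util.Log
import java.io.IOException
import org.tensorflow.lite.Interpreter
import org.tensorflow.lite.support.common.FileUtil

try {
    // Memory-map the model file from src/main/assets
    val tfliteModel = FileUtil.loadMappedFile(context, "modelCopy.tflite")
    val tflite = Interpreter(tfliteModel)
} catch (e: IOException) {
    Log.e("tfliteSupport", "Error reading model", e)
}
```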
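One way to tell a conversion problem apart from an Android packaging problem is to load the same `.tflite` file on a desktop first. As I understand the TensorFlow Lite select-ops guide, the `tf.lite.Interpreter` shipped in the full `tensorflow` pip package already includes the select TF op kernels, so a model that uses Flex ops should load there. This is a sketch under that assumption; `try_load_tflite` is a hypothetical helper name.

```python
def try_load_tflite(model_path: str) -> list:
    """Load a .tflite model with the desktop interpreter (hypothetical helper).

    Returns (name, shape) for each model input; raises if the interpreter
    cannot be built, e.g. because an op has no available kernel.
    """
    # Imported lazily so this snippet can be imported without TensorFlow.
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=model_path)
    # Kernels are resolved and memory is planned here.
    interpreter.allocate_tensors()
    return [
        (d["name"], tuple(int(x) for x in d["shape"]))
        for d in interpreter.get_input_details()
    ]
```

If `try_load_tflite('Models/MoViNet/lite/model.tflite')` succeeds on the desktop but the app still fails, the problem is more likely in the Android setup than in the converted file.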

I have also tried putting the model in an `ml` package and loading it through the generated class (same error):

```kotlin
import com.example.slr.ml.Model

val model = Model.newInstance(context)
```

These are the dependencies in my Gradle file:

```kotlin
implementation("org.tensorflow:tensorflow-lite:0.0.0-nightly-SNAPSHOT")
// This dependency adds the necessary TF op support.
implementation("org.tensorflow:tensorflow-lite-select-tf-ops:0.0.0-nightly-SNAPSHOT")
implementation("org.tensorflow:tensorflow-lite-support:0.0.0-nightly-SNAPSHOT")
implementation("org.tensorflow:tensorflow-lite-metadata:0.0.0-nightly-SNAPSHOT")
```
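Whether the `tensorflow-lite-select-tf-ops` dependency matters here depends on how the converter encoded `AvgPool3D`: ops taken from `SELECT_TF_OPS` are stored as custom ops whose name is the TensorFlow op name prefixed with `Flex` (for example `FlexAvgPool3D`). The sketch below is a crude heuristic, not a flatbuffer parser: it only scans the raw `.tflite` bytes for ASCII strings that look like Flex op names, so false positives are possible. The function names are hypothetical. With TensorFlow 2.7 or later installed, `tf.lite.experimental.Analyzer.analyze(model_path=...)` gives a proper op listing instead.

```python
import re
from pathlib import Path

# Flex (select TF) op names are "Flex" + a TensorFlow op name, e.g. "FlexAvgPool3D".
_FLEX_NAME = re.compile(rb"Flex[A-Za-z0-9_]+")


def find_flex_op_names(model_bytes: bytes) -> set:
    """Heuristically list Flex op names found in raw .tflite bytes.

    Does not parse the flatbuffer; it only searches for ASCII strings
    that look like Flex custom-op codes.
    """
    return {m.group().decode("ascii") for m in _FLEX_NAME.finditer(model_bytes)}


def find_flex_ops_in_file(path: str) -> set:
    """Read a .tflite file and run the heuristic scan on its contents."""
    return find_flex_op_names(Path(path).read_bytes())
```

For example, `print(find_flex_ops_in_file('Models/MoViNet/lite/model.tflite'))`. If `FlexAvgPool3D` appears, the model relies on the select-TF-ops runtime at load time.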

Does anyone know how to include the `AvgPool3D` operation, or another way to fix this issue? (`AvgPool3D` is listed among the supported core ops here.)