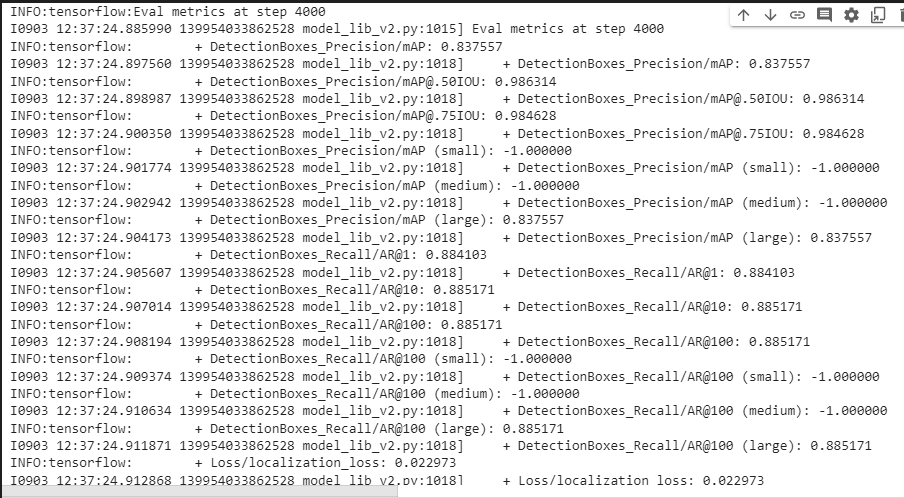

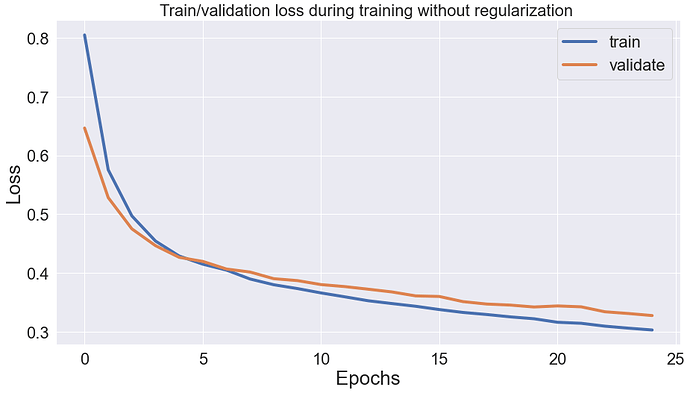

Ideal training lengths change wildly between different datasets and is a lot of trial and error. The usual practise is to set the length of the training hight (many steps) and monitor the training session and stop when overfitting begins to occur.

It looks like by training for 3000 steps, 4000 steps and 5000 steps and comparing your results you’re getting a good idea of where overfitting is occurring. I guess its important to remember, overfitting is where your model is trained too specifically on your data and is not general enough to be accurate on new data (ie, the model becomes too good at detecting for the trained images that any new data introduced is too incorrect for the model to be useful).

This article is quite good in explaining some of the common issues surrounding convergence. But it looks like you’ve found a good balance.

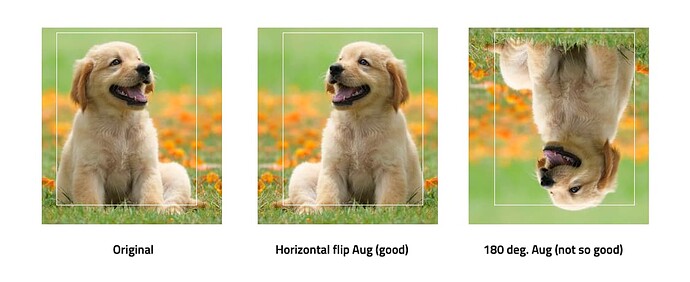

Regarding pipeline augmentation this is again up to the model trainer. Some people like to maintain full control of their augmentation and control it manually, some people don’t mind allowing the model/pipeline to do it.

The key point to focus on is what augmentation method the pipeline is using and what you are doing manually (ie are you flipping your images horizontally, vertically, rotating, adding distortion, warping etc.)

Its important to look at what you’re trying to detect and apply it to what is a realistic real use application of the model might be. For instance, if I was trying to detect a dog, it would make sense to augment my training data to flip horizontally, because there is a chance the dog could be both facing left and/or right. Rotating the image 180 degrees though wouldn’t be that handy, because most images of dogs aren’t going to be upside down. The picture below demonstrates that. Your lung scans may be different though, depending what the scans look like.

I guess the key thing to think about when augmenting images is to look at what you’re trying to detect and augment it in useful real world application ways. Balancing data is a very good idea as well, as much as you want your model to detect covid accurately you also want it to detect normal/no covid accurately as well.

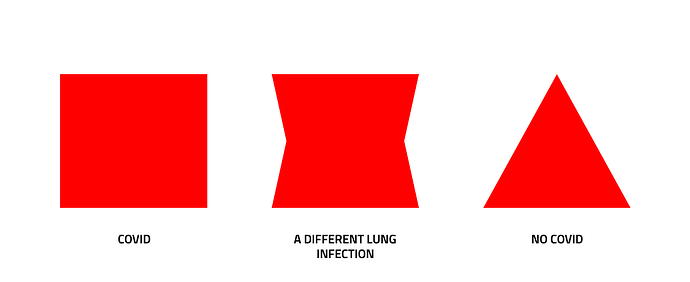

In regard to the question about ‘images of other lung issues’ this again will be about how you intend to use the model. If your use case is the scenario where the images either have covid or they are normal/no covid then this wouldn’t weigh into the equation, purely detecting covid may be enough. If however, your use case is all images of lungs are being analysed and they may have any range of different forms in them, it might make sense to try to differentiate between similar looking objects. An example of this is shown in the image below:

I’m not totally sure of the answer to this, and have heard competing logic around the problem. Telling the difference between a square (covid) and a triangle (no covid) is fairly straight forward, but what happens when its close to a square (a different lung infection). Depending where your threshold of positive identification sits it will have to pick to either be a square or a triangle (because they are the only two options it can pick from).

This is just something to think about for real world applications, whether this is what you’re actively pursuing or not is a different story, but it’s still worth thinking about as a theoretical dilemma for your model.

This also leads into the issue around null detections as well, and how to build them into your dataset. Another blog article by Roboflow on that is here, its worth a read if it’s not directly related to your model.

Some of this stuff may have nothing to do with your model, but its probably worth keeping it in mind when looking at your data and trying to understand how your model gets to where its going (if that makes sense).

INFO:tensorflow:{‘Loss/BoxClassifierLoss/classification_loss’: 0.016595816,

INFO:tensorflow:{‘Loss/BoxClassifierLoss/classification_loss’: 0.016595816,