I’ve been trying to get TF to see my GPU on Windows 10, with WSL2 installed. As far as I can tell I’ve done everything in the installation documentation, but I still can’t get my GPU detected.

I’m using Anaconda, and TF is in a separate environment from root, but I had to install TF with pip since the version conda could fetch didn’t work with Python 3.11.

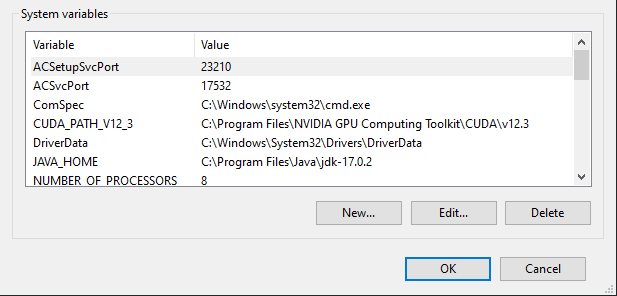

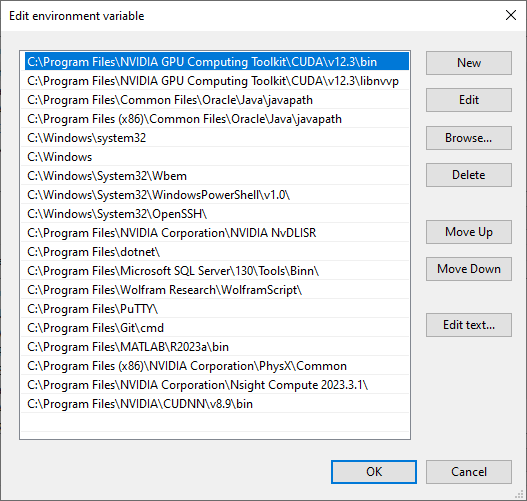

I’ve updated PATH with CUDA and cuDNN, have Ubuntu on WSL2, and I’ve followed Nvidia’s instructions on getting CUDA to work within WSL.

I started with CUDA Toolkit 11.8 to follow the TF docs to the letter, then decided it might be better if the toolkit matched the CUDA version that the nvidia-smi command reports. Either way, tf.config.list_physical_devices() returns only the CPU.

CPU: Intel i7-11375H

GPU: RTX3070 Laptop

Nvidia driver version: 546.33

CUDA version: 12.3

WSL version: 2.0.9.0

Ubuntu version: 22.04.3 LTS

Python version: 3.11.5

TF version: 2.13.1

Windows version: 10 Home 22H2, build 19045.3803

Hi @Kurt_Ger, it is always recommended to use the CUDA version from the tested build configuration, i.e. CUDA 11.8 for TF 2.13. Thank you.
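To see which CUDA and cuDNN versions an installed TF wheel was actually built against, a minimal sketch along these lines may help. It relies on tf.sysconfig.get_build_info(); the helper name describe_build is made up for illustration, and the keys are read with .get() because a CPU-only build may not report the CUDA entries.

```python
import importlib.util


def describe_build(build_info):
    """Summarize the CUDA-related entries of a TF build-info mapping.

    describe_build is a hypothetical helper, not part of TensorFlow.
    """
    is_cuda = build_info.get("is_cuda_build", False)
    cuda = build_info.get("cuda_version", "n/a")
    cudnn = build_info.get("cudnn_version", "n/a")
    return f"CUDA build: {is_cuda}, CUDA {cuda}, cuDNN {cudnn}"


# Only query TensorFlow if it is installed in the active environment.
if importlib.util.find_spec("tensorflow") is not None:
    import tensorflow as tf

    print("TF", tf.__version__)
    print(describe_build(tf.sysconfig.get_build_info()))
else:
    print("TensorFlow is not installed in this environment")
```

If the first field reads "CUDA build: False", the wheel is a CPU-only build, and no toolkit version will make it see a GPU.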

I tried this first with CUDA Toolkit 11.8 and cuDNN 8.6.0 as was in the docs, then tried the upgrade when it didn’t work. Sorry if that didn’t come across in the post.

Would you recommend updating/reinstalling TF without the [and-cuda] suffix?

UPDATE: Ubuntu can see the GPU, but Windows still can’t. I tried to attach the screenshots I got running pip install tensorflow[and-cuda], and another calling tf.config.list_physical_devices('GPU') from the WSL shell, but I keep getting told I “can’t embed media items to a post”.

Why could this be happening? Does it have to do with Ubuntu fetching a different version of TF? Is Anaconda the problem? How can I start doing stuff with TF using GPU on Windows as somebody who Linux doesn’t want to befriend quite yet?

Hi Kurt,

Did you literally execute “pip install tensorflow[and-cuda]”? I tried it in Ubuntu (I’m in Windows right now and can’t run it), but it didn’t work.

Could you explain how you did it, step by step? I can’t get my GPU recognized on native Ubuntu Linux.

Thanks!

“Success” means the GPU was visible after running import tensorflow as tf; tf.config.list_physical_devices(). “Failure” means it wasn’t.
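For anyone who wants to repeat that check, it can be sketched as a small script. The helper names gpu_check and visible_device_types are made up for illustration; the only TF call used is tf.config.list_physical_devices(), whose entries carry a device_type field.

```python
import importlib.util


def gpu_check(device_types):
    """Return "Success" if any device type is "GPU", else "Failure".

    gpu_check is a hypothetical helper, not part of TensorFlow.
    """
    return "Success" if "GPU" in device_types else "Failure"


def visible_device_types():
    """List the device types TensorFlow can see, e.g. ["CPU", "GPU"]."""
    import tensorflow as tf

    return [d.device_type for d in tf.config.list_physical_devices()]


# Only run the real check if TensorFlow is installed in this environment.
if importlib.util.find_spec("tensorflow") is not None:
    print(gpu_check(visible_device_types()))
else:
    print("TensorFlow is not installed in this environment")
```

Running it once from the Windows Anaconda prompt and once from the WSL shell gives a direct comparison between the two environments.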

- On Anaconda, in a separate environment from root, TF 2.15 was installed.

- As per the install instructions, installed CUDA Toolkit 11.8 and cuDNN 8.6.

- Failure on Windows.

- Executed literally pip install tensorflow[and-cuda] in cmd with the Anaconda environment activated, which downgraded TF to 2.13.1.

- Failure on Windows.

- Upgraded to CUDA Toolkit 12.3 and cuDNN 8.9; followed the instructions at the link “Setup NVIDIA GPU support on WSL2”.

- Failure on Windows.

- Installed WSL2 with Ubuntu 22.04.3 LTS.

- On Ubuntu (from wsl.exe), executed literally pip install tensorflow[and-cuda], which installed TF 2.15.

- Success on WSL2-Ubuntu.

- Failure on Windows.
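The pattern in these steps (success under WSL2, failure on native Windows) matches what the TensorFlow install docs say: 2.10 was the last release with GPU support on native Windows, and later versions need WSL2 for GPU use. The sketch below encodes only that rule. The function names are made up for illustration, and spotting WSL by looking for "microsoft" in the kernel release string is a common heuristic, not an official API.

```python
import platform


def classify_platform(system, release):
    """Return "windows", "wsl", or "other" from platform strings.

    The "microsoft" substring test for WSL is a heuristic assumption.
    """
    if system == "Windows":
        return "windows"
    if system == "Linux" and "microsoft" in release.lower():
        return "wsl"
    return "other"


def native_windows_gpu_supported(tf_version):
    """True if this TF version still supports GPU on native Windows (<= 2.10)."""
    major, minor = (int(part) for part in tf_version.split(".")[:2])
    return (major, minor) <= (2, 10)


kind = classify_platform(platform.system(), platform.release())
print("Running on:", kind)
# TF 2.13.1 is the version from the Windows environment in this thread.
if kind == "windows" and not native_windows_gpu_supported("2.13.1"):
    print("TF 2.13.1 has no GPU support on native Windows; use WSL2 instead.")
```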

What I didn’t realize was that VS Code has a WSL extension. After installing it, selecting “Connect to WSL” under VS Code’s remote options, and doing some configuration (extensions for Python, etc.) so everything works under Ubuntu, I can now train models using my GPU. I tried it on some old coursework, and the result varies from “actually slower” to “twice as fast” depending on the model.