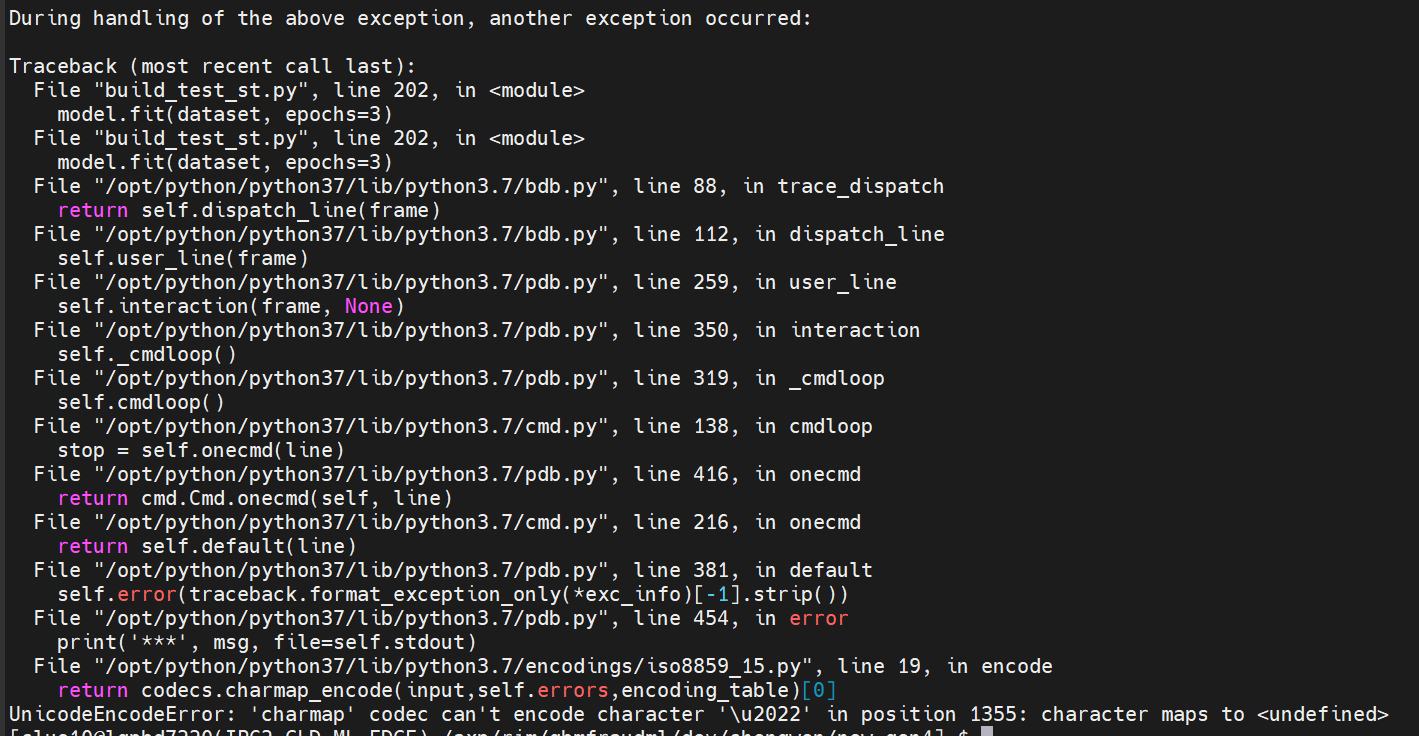

The code below raises an error that I believe is related to `tf.data.Dataset`:

    from keras.models import Sequential
    from keras.layers import Dense, Dropout, LSTM, BatchNormalization, Flatten
    from keras.optimizers import Adam
    import tensorflow as tf
    import numpy as np

    # Toy data: 4 samples with 13 features each, and one binary label per sample.
    a = np.array([[1,2,3,4,4,5,61,2,3,4,4,5,6],[1,2,3,4,4,5,61,2,3,4,4,5,6],[1,2,3,4,4,5,61,2,3,4,4,5,6],[1,2,3,4,4,5,61,2,3,4,4,5,6]])
    y = np.array([[1],[1],[1],[1]])

    model = Sequential([
        Dense(20, activation="relu"),
        Dense(100, activation="relu"),
        Dense(1, activation="sigmoid")
    ])

    print(np.shape(a))

    def generator_aa(a, y, batch_size):
        # Yield shuffled (features, labels) batches forever.
        while True:
            indices = np.random.permutation(len(a))
            for i in range(0, len(indices), batch_size):
                batch_indices = indices[i:i+batch_size]
                yield a[batch_indices], y[batch_indices]

    my_opt = Adam(learning_rate=0.01)
    model.compile(loss='binary_crossentropy', optimizer=my_opt, metrics=['accuracy'])

    # `dataset` is not defined anywhere in this snippet.
    model.fit(dataset, epochs=3)

I'm not sure why this happens.
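For reference, here is a minimal sketch of one way `dataset` could be built from `generator_aa`, assuming the intent was to wrap the generator with `tf.data.Dataset.from_generator`. The `batch_size` of 2, the cast to `float32`, and the `steps_per_epoch` variable are assumptions that are not part of the snippet above, and the `output_signature` argument assumes TensorFlow 2.4 or newer.

```python
import numpy as np
import tensorflow as tf

# Same toy data as above, cast to float32 so the dtypes match the signature below.
a = np.array([[1, 2, 3, 4, 4, 5, 61, 2, 3, 4, 4, 5, 6]] * 4, dtype=np.float32)
y = np.array([[1], [1], [1], [1]], dtype=np.float32)

batch_size = 2  # assumed value; the original snippet does not give one


def generator_aa(a, y, batch_size):
    # Yield shuffled (features, labels) batches forever.
    while True:
        indices = np.random.permutation(len(a))
        for i in range(0, len(indices), batch_size):
            batch_indices = indices[i:i + batch_size]
            yield a[batch_indices], y[batch_indices]


# from_generator expects a callable that takes no arguments, hence the lambda.
dataset = tf.data.Dataset.from_generator(
    lambda: generator_aa(a, y, batch_size),
    output_signature=(
        tf.TensorSpec(shape=(None, a.shape[1]), dtype=tf.float32),
        tf.TensorSpec(shape=(None, 1), dtype=tf.float32),
    ),
)

# The generator never ends, so an epoch length has to be given explicitly.
steps_per_epoch = int(np.ceil(len(a) / batch_size))

# Pull one batch to check the shapes before handing the dataset to Keras.
features, labels = next(iter(dataset))
print(features.shape, labels.shape)
```

If the dataset is built this way, the call would probably need to become `model.fit(dataset, epochs=3, steps_per_epoch=steps_per_epoch)`, since an infinite generator gives Keras no way to tell where an epoch ends.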