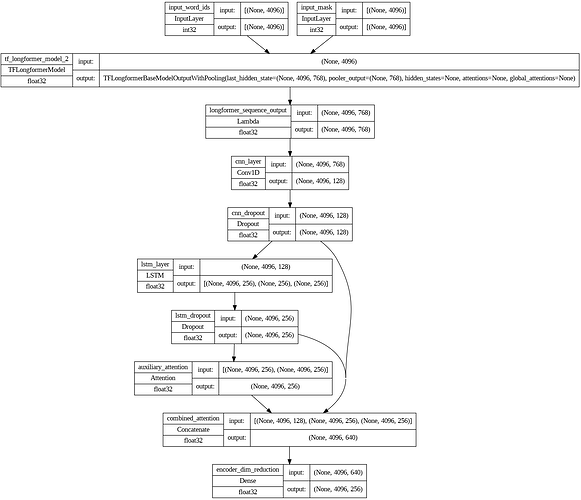

The picture shows my encoder flow. When I run my decoder, it keeps crashing with the same error:

```
ValueError: Graph disconnected: cannot obtain value for tensor KerasTensor(type_spec=TensorSpec(shape=(None, 4096), dtype=tf.int32, name='input_word_ids'), name='input_word_ids', description="created by layer 'input_word_ids'") at layer "tf_longformer_model_2". The following previous layers were accessed without issue: [].
```

I have checked the encoder flow for disconnections around the `input_word_ids` and `tf_longformer_model` layers, but I can't do the same for my `decoder_model` because it keeps failing with the same error. The decoder structure is:

```python
import tensorflow as tf
from tensorflow.keras.layers import (
    Input, Embedding, LSTM, Attention, Dense, Concatenate,
    TimeDistributed, Lambda, Add,
)
from tensorflow.keras.models import Model

# state_h, state_c, encoder_dim_reduction, max_length and vocab_size
# are defined in the encoder code (not shown here).

decoder_dim = 256

# Decoder inputs
decoder_inputs = Input(shape=(None,), dtype=tf.int32, name='decoder_inputs')
coverage_input = Input(shape=(max_length,), dtype=tf.float32, name="coverage_input")
initial_h = Input(shape=(256,), name='initial_h')
initial_c = Input(shape=(256,), name='initial_c')

# Embedding layer for the decoder
decoder_embedding = Embedding(input_dim=vocab_size, output_dim=decoder_dim, name='decoder_embedding')
decoder_embedded = decoder_embedding(decoder_inputs)

# Decoder LSTM
decoder_lstm = LSTM(decoder_dim, return_sequences=True, return_state=True, name='decoder_lstm')
decoder_lstm_out, _, _ = decoder_lstm(decoder_embedded, initial_state=[state_h, state_c])

# Attention layer
attention_layer = Attention(use_scale=True, name='attention_layer')
attention_out, attention_weights = attention_layer([decoder_lstm_out, encoder_dim_reduction], return_attention_scores=True)

# Coverage mechanism: update the coverage vector
coverage_update_layer = Add(name="coverage_update")
updated_coverage = coverage_update_layer([coverage_input, Lambda(lambda x: tf.reduce_sum(x, axis=-1))(attention_weights)])

# Reduce the dimensionality of the coverage to match the attention output
coverage_projection = TimeDistributed(Dense(decoder_dim), name="coverage_projection")(updated_coverage[:, tf.newaxis])

# Combine LSTM output, attention output, and coverage vector
decoder_concat_input = Concatenate(axis=-1, name="decoder_concat_input")([decoder_lstm_out, attention_out, coverage_projection])

# Dense layer to generate probabilities over the vocabulary
output_layer = TimeDistributed(Dense(vocab_size, activation='softmax'), name='output_layer')
decoder_outputs = output_layer(decoder_concat_input)

# Pointer-generator layer for the copy mechanism
pointer_generator = Dense(1, activation='sigmoid', name="pointer_generator")(decoder_concat_input)

# Final decoder model
decoder_model = Model(
    inputs=[decoder_inputs, initial_h, initial_c, coverage_input],
    outputs=[decoder_outputs, updated_coverage, pointer_generator],
    name='decoder_model'
)

decoder_outputs, updated_coverage, pointer_generator = decoder_model(
    [decoder_inputs, state_h, state_c, coverage_input]
)

# Print the summary to inspect the model architecture
decoder_model.summary()
```
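
A likely cause, offered as a sketch rather than a confirmed diagnosis: `decoder_model` is built from `state_h`, `state_c` and `encoder_dim_reduction`, which are tensors from the encoder graph, while its `inputs` list only contains `decoder_inputs`, `initial_h`, `initial_c` and `coverage_input`. Keras traces those encoder tensors back to `input_word_ids`, which is not an input of `decoder_model`, and raises `Graph disconnected`. Note also that `initial_h` and `initial_c` are declared but never used, because the LSTM receives `initial_state=[state_h, state_c]`. The sketch below declares every tensor the decoder consumes as an `Input` (including the encoder output) and uses `initial_h`/`initial_c` as the LSTM state. It assumes one decoding step per call, illustrative sizes (`vocab_size=1000`, `max_length=64`), and that `encoder_dim_reduction` has last dimension `decoder_dim`, which dot-product `Attention` requires; `build_decoder`, `build_disconnected` and `encoder_outputs_in` are hypothetical names. Separately, Keras `Attention` returns scores of shape `(batch, decoder_steps, max_length)`, so `tf.reduce_sum(x, axis=-1)` sums over encoder positions and yields `(batch, decoder_steps)`, which won't match `coverage_input`; summing over axis 1 gives the per-position coverage.

```python
import numpy as np
from tensorflow.keras import Model
from tensorflow.keras.layers import (
    LSTM, Add, Attention, Concatenate, Dense, Embedding, Input, Reshape, TimeDistributed,
)

# Hypothetical sizes, for illustration only.
vocab_size = 1000
max_length = 64     # encoder sequence length (assumed)
decoder_dim = 256   # assumed to equal the last dim of encoder_dim_reduction


def build_decoder():
    """Single-step decoder whose every dependency is an explicit Input."""
    decoder_inputs = Input(shape=(1,), dtype="int32", name="decoder_inputs")
    # Stand-in for encoder_dim_reduction: fed as data, not captured from the encoder graph.
    encoder_outputs_in = Input(shape=(max_length, decoder_dim), name="encoder_outputs_in")
    coverage_input = Input(shape=(max_length,), name="coverage_input")
    initial_h = Input(shape=(decoder_dim,), name="initial_h")
    initial_c = Input(shape=(decoder_dim,), name="initial_c")

    embedded = Embedding(vocab_size, decoder_dim, name="decoder_embedding")(decoder_inputs)
    # Use the decoder's own Inputs as initial state, not the encoder's state_h/state_c.
    lstm_out, h, c = LSTM(decoder_dim, return_sequences=True, return_state=True,
                          name="decoder_lstm")(embedded, initial_state=[initial_h, initial_c])

    attention_out, attention_weights = Attention(use_scale=True, name="attention_layer")(
        [lstm_out, encoder_outputs_in], return_attention_scores=True)

    # attention_weights: (batch, 1, max_length). With a single decoder step,
    # flattening it is the same as summing over the decoder-time axis.
    step_attention = Reshape((max_length,))(attention_weights)
    updated_coverage = Add(name="coverage_update")([coverage_input, step_attention])

    # Project coverage to decoder_dim and add a length-1 time axis for concatenation.
    coverage_projection = Reshape((1, decoder_dim))(
        Dense(decoder_dim, name="coverage_projection")(updated_coverage))

    concat = Concatenate(axis=-1, name="decoder_concat_input")(
        [lstm_out, attention_out, coverage_projection])
    decoder_outputs = TimeDistributed(Dense(vocab_size, activation="softmax"),
                                      name="output_layer")(concat)
    pointer_generator = Dense(1, activation="sigmoid", name="pointer_generator")(concat)

    return Model(
        inputs=[decoder_inputs, encoder_outputs_in, initial_h, initial_c, coverage_input],
        # h and c are returned so the caller can feed them back on the next step.
        outputs=[decoder_outputs, updated_coverage, pointer_generator, h, c],
        name="decoder_model",
    )


def build_disconnected():
    """Reproduces the error: an output depends on a tensor missing from `inputs`."""
    query = Input(shape=(1, decoder_dim))
    encoder_tensor = Input(shape=(max_length, decoder_dim))  # plays the encoder's role
    out = Attention()([query, encoder_tensor])
    return Model(inputs=query, outputs=out)  # encoder_tensor not listed -> ValueError


if __name__ == "__main__":
    decoder_model = build_decoder()
    batch = 2
    probs, coverage, p_gen, h, c = decoder_model.predict(
        [
            np.zeros((batch, 1), dtype="int32"),
            np.random.rand(batch, max_length, decoder_dim).astype("float32"),
            np.zeros((batch, decoder_dim), dtype="float32"),
            np.zeros((batch, decoder_dim), dtype="float32"),
            np.zeros((batch, max_length), dtype="float32"),
        ],
        verbose=0,
    )
    print(probs.shape, coverage.shape, p_gen.shape)  # (2, 1, 1000) (2, 64) (2, 1, 1)
```

At inference time you would run the encoder once, pass its output as `encoder_outputs_in` on every step, and feed the returned `h`, `c` and coverage back in on the next step, so the decoder never needs to reach into the encoder graph.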

Any suggestions on what I can change to fix this would be much appreciated. I've been stuck on it for a week now.