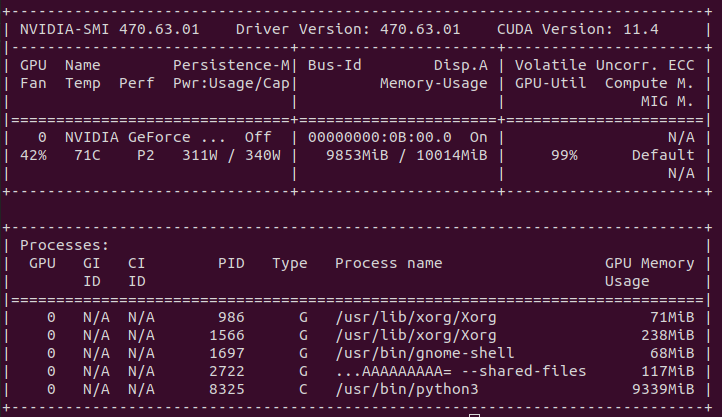

Hello everyone, I have a 10GB RTX 3080 GPU. nvidia-smi reports 10014MiB of memory, but TensorFlow reports:

```
Created device /job:localhost/replica:0/task:0/device:GPU:0 with 7591 MB memory
```
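For scale, here is a quick Python check of how much of the card TensorFlow actually claimed. It assumes both figures are in MiB: nvidia-smi labels its number MiB, but treating TensorFlow's "MB" as MiB is an assumption on my part.

```python
# Figures quoted in this post; both assumed to be MiB (2**20 bytes).
NVIDIA_SMI_TOTAL_MIB = 10014  # total memory reported by nvidia-smi
TF_DEVICE_MIB = 7591          # memory in TensorFlow's "Created device" log line

share = TF_DEVICE_MIB / NVIDIA_SMI_TOTAL_MIB
gap_mib = NVIDIA_SMI_TOTAL_MIB - TF_DEVICE_MIB

print(f"TensorFlow pool: {share:.1%} of the card")                          # TensorFlow pool: 75.8% of the card
print(f"Not given to TensorFlow: {gap_mib} MiB ({gap_mib / 1024:.2f} GiB)")  # Not given to TensorFlow: 2423 MiB (2.37 GiB)
```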

After some initial research I was convinced that this was related to Windows 10 OS limitations, so I installed Ubuntu 20.04 in dual boot. It didn’t change anything. I also tried various versions of TensorFlow, CUDA and cuDNN:

- TensorFlow: 2.5.0, 2.5.1, 2.6.0

- CUDA Toolkit: 11.2, 11.4

- cuDNN: 8.1.0, 8.1.1, 8.2.4

- Python: 3.8.10

I tried using:

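```python
import tensorflow as tf

# Must run before any GPU has been initialized, otherwise TF raises a RuntimeError
physical_devices = tf.config.list_physical_devices('GPU')
for gpu_instance in physical_devices:
    tf.config.experimental.set_memory_growth(gpu_instance, True)
```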

It didn’t fix the problem. Also, I tried:

```python
from tensorflow.compat.v1 import ConfigProto, InteractiveSession

config = ConfigProto()
config.gpu_options.per_process_gpu_memory_fraction = 1.0
session = InteractiveSession(config=config)
```

And indeed, TensorFlow then reported the full 10GB of memory in the ‘Created device’ message, so TF should be seeing the memory properly. With this method I was able to push the allocation to about 8GB, which even allowed me to run a slightly higher batch size. But if I set the fraction above 0.8 (the exact threshold varies slightly from run to run), I get:

```
2021-09-26 12:48:26.691479: F tensorflow/core/util/cuda_solvers.cc:115] Check failed: cusolverDnCreate(&cusolver_dn_handle) == CUSOLVER_STATUS_SUCCESS Failed to create cuSolverDN instance.
```
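To see what each `per_process_gpu_memory_fraction` value means in absolute terms, here is a small sketch. It assumes the fraction is applied to the 10014 MiB total (the full-10GB report at 1.0 suggests so, but that is an assumption), and `pool_size_mib` is a hypothetical helper for illustration only. The headroom is whatever would remain for allocations made outside TensorFlow's pool; whether the cuSolverDN failure comes from running out of that headroom is only a guess.

```python
TOTAL_MIB = 10014  # nvidia-smi total for the RTX 3080 in this post


def pool_size_mib(fraction: float, total_mib: int = TOTAL_MIB) -> float:
    """Hypothetical helper: approximate TF pool size for a memory fraction.

    Assumes the fraction is applied to the card's total memory.
    """
    return fraction * total_mib


for fraction in (0.8, 0.9, 1.0):
    pool = pool_size_mib(fraction)
    headroom = TOTAL_MIB - pool  # memory left outside TensorFlow's pool
    print(f"fraction={fraction:.1f}: pool ~{pool:.0f} MiB, headroom ~{headroom:.0f} MiB")
```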

One important thing to note is that while TensorFlow reports a device with ~7.5GB, nvidia-smi shows /usr/bin/python3 using more than 9GB! I am not running any other Python script in parallel.

So the actual memory usage is reaching the card's limit while I can only use 7.5GB, which is even less than the known ~81% limitation for Windows 10 users! Why am I being allocated almost 2GB extra on top that I can’t use?
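The same arithmetic for the numbers in this paragraph. "More than 9GB" is read here as 9 GiB (9216 MiB), which is an assumption, so the result is only a lower bound on what the process holds outside TensorFlow's 7591 MiB pool.

```python
TOTAL_MIB = 10014        # nvidia-smi total
TF_POOL_MIB = 7591       # TensorFlow "Created device" figure
PROCESS_MIB = 9 * 1024   # assumption: "more than 9GB" read as 9 GiB (a lower bound)

windows_limit_mib = 0.81 * TOTAL_MIB          # the ~81% Windows 10 limit mentioned above
outside_pool_mib = PROCESS_MIB - TF_POOL_MIB  # held by python3 but not by TF's pool

print(f"81% of the card: ~{windows_limit_mib:.0f} MiB vs TF pool {TF_POOL_MIB} MiB")
print(f"Process usage outside TF's pool: at least ~{outside_pool_mib} MiB")
```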

I have been trying to fix this for a long time and really have no idea what to do now. The other reports of missing TF memory that I found on the Internet were related to Windows; mine is not, since this happens on Ubuntu too. Am I missing something? I would really appreciate any idea of what is going on.

Thank you in advance.